Part 1: How to build a mobile app as a product person (stop prompting, start planning).

Cursor helped me build a chatbot and an ML scanner in days. Knowing what NOT to ship took longer.

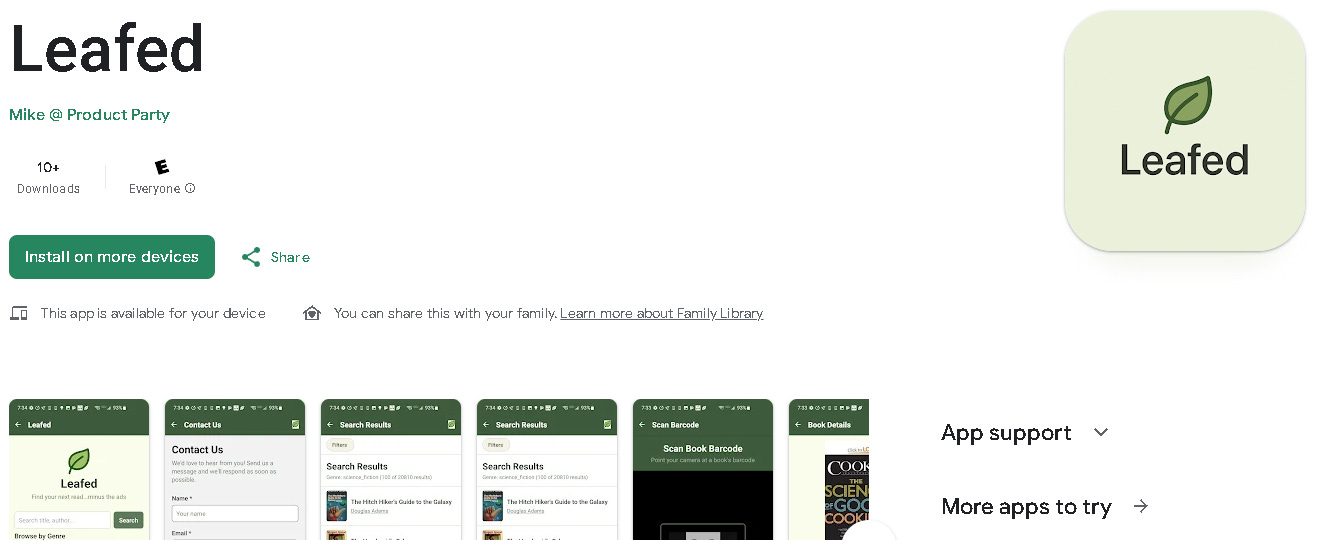

I set a bunch of goals for 2025, but the one I didn’t expect to hit was building a mobile app and getting it into production. Not a prototype living on my laptop. An actual app in the Play Store that real people could download.

The motivation was practical. If you’re doing product work in 2025, understanding mobile isn’t optional. You need to know what’s hard, what’s expensive, where the real friction lives. The only way to learn is to ship something.

So I built Leafed, a book search engine using free APIs from a GitHub list. Nothing revolutionary. That was the point.

Coding wasn’t the hard part. Building ambitious features wasn’t hard either. The surprisingly hard part? Knowing which features NOT to ship.

Stop asking for features, start asking for plans.

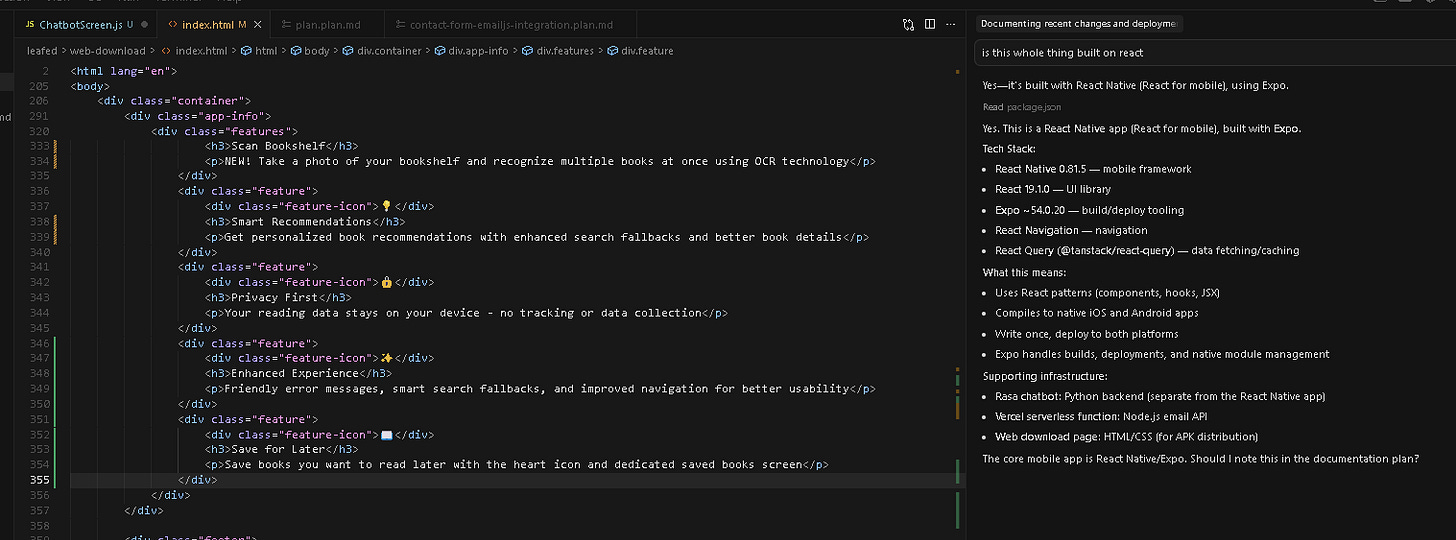

I’ve been writing about “vibe coding” for months. Using AI tools to build faster than traditional development. Cursor IDE became my go-to because it’s not just autocomplete. It’s a development “partner” that architects, debugs, and iterates with you.

My pattern was simple: ask Cursor to build a feature, review it, ask for the next one, iterate. Ship when it looks good.

That works for side projects. But for the Play Store? I wasn’t ready.

Even though the feature had been around for a while, my biggest breakthrough came when I started using Cursor’s Agent “Plan” feature.

Instead of: “Build me a book search feature.”

I asked: “Create a comprehensive launch checklist for getting an Android app from development through Play Store production, including all technical requirements, testing phases, and compliance steps.”

Right off the rip, the results that came back were way better than I had anticipated.

A 12-phase roadmap from environment setup to post-launch monitoring. Feature flag systems for controlling what ships. Automated deployment scripts for pushing APKs to my own domain. Build configurations for local testing and app store submissions.

That checklist became my project plan. Not a reference document. The actual plan I followed from day one.

The Cursor Agent “Plan” feature became essential throughout the project. I used it to workshop new features, debug issues, and even write documentation about my codebase for app submission. It’s like having a senior developer who can see your entire project and help you think through decisions.

This is the shift most people miss: Stop treating AI tools like better documentation. Start treating them like experienced advisors who’ve shipped a hundred apps before you.

Ambitious features I built (that nobody will ever see).

With a comprehensive plan and feature flags in place, I got ambitious. Why just search for books when you could build something impressive?

I know great products such as Goodreads already cover the book space well, but I thought I could learn a couple of things by building from scratch and adding a few features to make things interesting.

The first was a conversational AI chatbot to help users discover books through natural dialogue. I built two versions:

Rasa chatbot (open-source, locally hosted)

Hybrid AI using Google Gemini with rule-based fallback

Both worked. Both were technically solid. Both got turned off before submission.

The Rasa model was too heavy for mobile. The performance hit wasn’t worth it. The Gemini hybrid was better, but in testing, people just wanted to search or scan. Not chat. The fancy solution lost to the simple one.
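For anyone wondering what a “hybrid AI with rule-based fallback” looks like, here is a minimal TypeScript sketch of the pattern. It assumes the model call is wrapped in an async function (`askModel` is hypothetical and stands in for whatever Gemini client you use) and falls back to keyword rules when that call fails or exceeds a timeout. The rules, replies, and the 3-second default are illustrative assumptions, not Leafed’s actual code.

```typescript
// Hypothetical signature for the AI call (e.g. a wrapper around a Gemini client).
type Responder = (message: string) => Promise<string>;

// Rule-based fallback: keyword patterns mapped to canned replies (illustrative only).
const RULES: Array<{ pattern: RegExp; reply: string }> = [
  { pattern: /\b(scan|barcode|isbn)\b/i, reply: "Try the barcode scanner to look up a specific book." },
  { pattern: /\b(search|find|title|author)\b/i, reply: "Use search to find books by title or author." },
];

function ruleBasedReply(message: string): string {
  const match = RULES.find((rule) => rule.pattern.test(message));
  return match ? match.reply : "I can help you search for books or scan a barcode.";
}

// Race the model against a timeout; on any error or timeout, answer from the rules instead.
async function hybridReply(
  message: string,
  askModel: Responder,
  timeoutMs = 3000,
): Promise<{ text: string; source: "model" | "rules" }> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error("model timeout")), timeoutMs);
  });
  try {
    const text = await Promise.race([askModel(message), timeout]);
    return { text, source: "model" };
  } catch {
    return { text: ruleBasedReply(message), source: "rules" };
  } finally {
    // Stop the pending timer so it cannot fire after the race is settled.
    clearTimeout(timer);
  }
}
```

The fallback is what keeps the feature usable offline or when an API quota runs out, which matters more on a phone than on a laptop.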

The second feature, inspired by my favorite wine-tracking app, Vivino, was a bookshelf scanner that used Google’s ML Kit OCR to scan multiple books at once. I built it, and the OCR extracted text from book spines beautifully.

But book covers are wildly inconsistent. Different fonts, decorative layouts, text placements that confuse recognition patterns. A better AI setup might have solved it (Amazon’s scanner proves it’s possible), but I wasn’t investing more money to experiment with premium OCR services.

So I turned it off too.

At the end of the day, I’ve realized that the best decision was to implement the feature flag system, which let me build ambitiously, test honestly, and cut intelligently. That’s impossible when you’re prompting incrementally. You need the architecture first.
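A feature flag system can be as small as a typed lookup table. The sketch below is a hypothetical TypeScript version, assuming flags are resolved per build profile with optional per-tester overrides. The profile names (`development`, `preview`, `production`) and flag names are illustrative, not taken from Leafed’s codebase.

```typescript
// Hypothetical flag and profile names modeled on the features described above.
type FeatureFlag = "bookSearch" | "barcodeScanner" | "aiChatbot" | "shelfScanner";
type BuildProfile = "development" | "preview" | "production";

// Defaults per build profile: everything on while developing,
// only the features that survived testing on in production.
const FLAGS: Record<BuildProfile, Record<FeatureFlag, boolean>> = {
  development: { bookSearch: true, barcodeScanner: true, aiChatbot: true, shelfScanner: true },
  preview: { bookSearch: true, barcodeScanner: true, aiChatbot: true, shelfScanner: false },
  production: { bookSearch: true, barcodeScanner: true, aiChatbot: false, shelfScanner: false },
};

// An explicit override (e.g. for a single tester build) wins over the profile default.
function isEnabled(
  flag: FeatureFlag,
  profile: BuildProfile,
  overrides: Partial<Record<FeatureFlag, boolean>> = {},
): boolean {
  return overrides[flag] ?? FLAGS[profile][flag];
}

// The list of features the app would register (screens, tabs) for a given profile.
function enabledFeatures(profile: BuildProfile): FeatureFlag[] {
  return (Object.keys(FLAGS[profile]) as FeatureFlag[]).filter((f) => isEnabled(f, profile));
}
```

Because cut features stay in the codebase behind `false` flags rather than being deleted, turning one back on for a test build is a one-line change.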

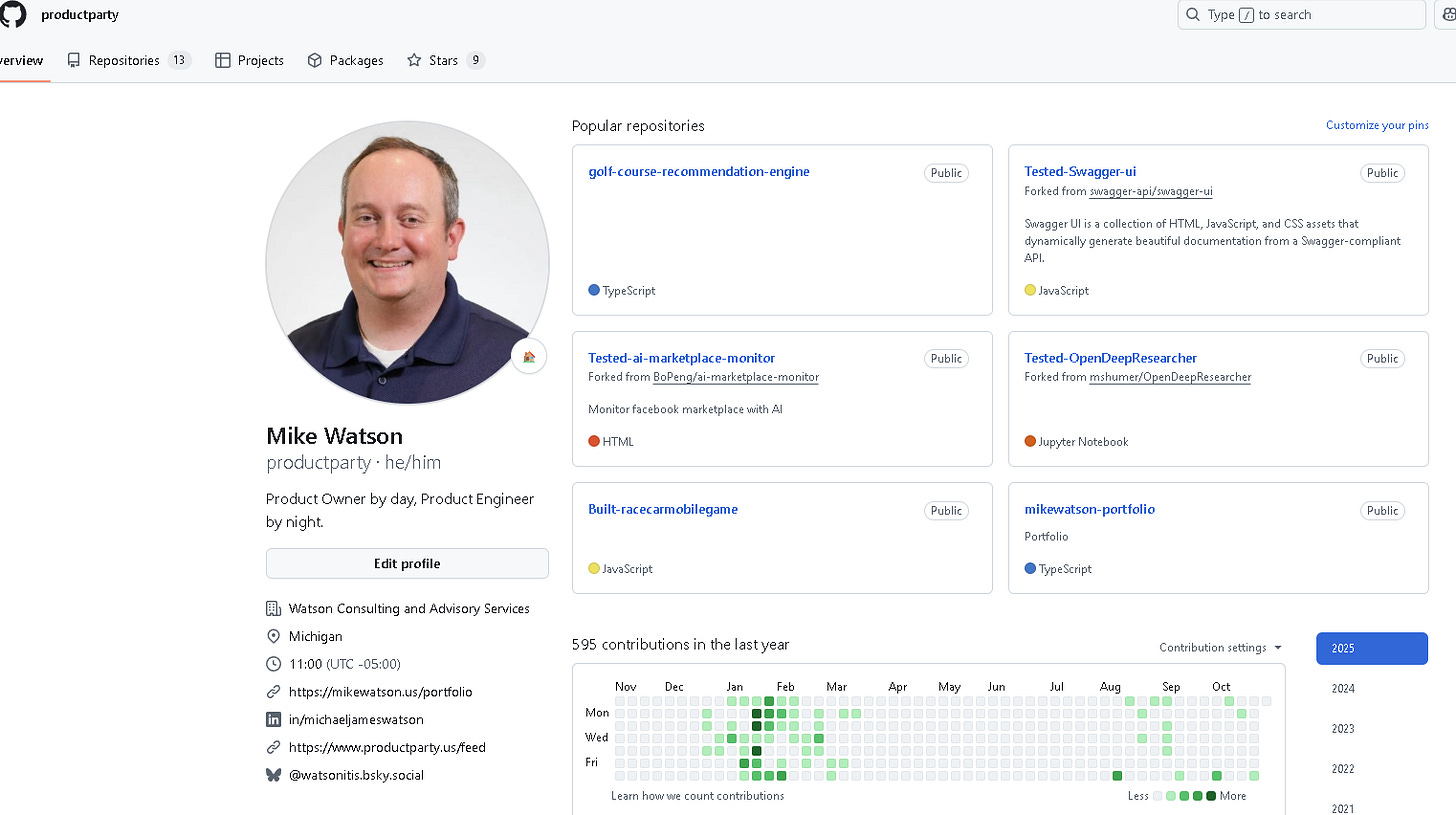

GitHub’s buried treasures.

Once you start looking, you realize the internet is full of free tools solving real problems. You just have to dig.

I found Google’s ML Kit Vision APIs while building that bookshelf scanner. Even though the full scanner failed, the barcode scanning component became Leafed’s primary feature. Functionality that would have cost serious money five years ago? Now it’s a few API calls.
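Book barcodes are EAN-13 codes, and ISBN-13s always start with 978 or 979, so a sensible first step after a scan is validating the check digit before spending an API call. A minimal sketch, assuming the scanner hands back the raw digit string. Open Library’s `/isbn/{isbn}.json` endpoint appears only as an example of a free book API; the source doesn’t say which APIs Leafed calls.

```typescript
// Strip hyphens and spaces so "978-0-306-40615-7" and "9780306406157" are treated alike.
function normalizeIsbn(raw: string): string {
  return raw.replace(/[-\s]/g, "");
}

// ISBN-13 check: weights alternate 1, 3, 1, 3, ... over the first 12 digits,
// and the 13th digit must equal (10 - sum % 10) % 10.
function isValidIsbn13(raw: string): boolean {
  const digits = normalizeIsbn(raw);
  if (!/^97[89]\d{10}$/.test(digits)) return false;
  let sum = 0;
  for (let i = 0; i < 12; i++) {
    sum += Number(digits[i]) * (i % 2 === 0 ? 1 : 3);
  }
  const check = (10 - (sum % 10)) % 10;
  return check === Number(digits[12]);
}

// Example lookup URL (Open Library chosen for illustration); null for invalid scans.
function openLibraryUrl(raw: string): string | null {
  const digits = normalizeIsbn(raw);
  return isValidIsbn13(digits) ? `https://openlibrary.org/isbn/${digits}.json` : null;
}
```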

Then there’s GitHub. Not the polished repositories on trending pages. The ones buried six pages deep when you search “Android book scanner” or “mobile OCR implementation.” Projects from developers who solved one specific problem and left their solution public.

I found a barcode implementation that saved days of work. Camera integration patterns handling edge cases I hadn’t considered. Components that were 80% of what I needed and fully customizable.
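One camera edge case that’s easy to miss: a barcode callback usually fires on many consecutive frames while the same code stays in view, so a naive handler triggers a burst of identical lookups. The hypothetical `createScanDeduper` helper below sketches one way to handle it; it is an illustration, not the GitHub implementation the paragraph above refers to, and the 2-second window is an assumption.

```typescript
// Returns a filter that accepts a code only if it hasn't been seen within `windowMs`.
// Every sighting refreshes the window, so a code held steadily in view fires once.
function createScanDeduper(windowMs = 2000) {
  const lastSeen = new Map<string, number>();
  return (code: string, now: number = Date.now()): boolean => {
    const previous = lastSeen.get(code);
    lastSeen.set(code, now);
    return previous === undefined || now - previous > windowMs;
  };
}

// Usage inside a (hypothetical) camera callback:
// const accept = createScanDeduper();
// onBarcode((code) => { if (accept(code)) lookUpBook(code); });
```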

Building in 2025 isn’t starting from scratch. It’s remixing. Finding pieces that work, understanding what they do, assembling something new.

How React Native and Expo made my dev life easy.

I didn’t build separate apps for Android and iOS. I used React Native with Expo, which turned out to be one of the smartest decisions I made.

React Native let me write once and deploy to both platforms. No maintaining two codebases. No learning Swift and Kotlin. Just JavaScript and the components I already understood.

But Expo was the real game-changer.

It gave me:

1) A web-based testing environment as a first step before building any native apps. I could test core functionality in a browser, iterate quickly, then push to mobile when ready.

2) Automated build infrastructure through EAS (Expo Application Services). Instead of wrestling with Xcode and Android Studio locally, I could trigger cloud builds that handled all the platform-specific complexity.

3) Easy APK generation for testing. I could build, download, and push APKs to my domain for real device testing without going through the stores.

4) TestFlight integration for Apple. Once I was ready for iOS testing, Expo handled the provisioning profiles and certificates that usually make developers want to quit.

This workflow meant I could test on web first, push APKs to mikewatson.us/leafed for Android testing, and use TestFlight for iOS testing. All from the same codebase.

Becoming my own distributor.

Before the Play Store would look at Leafed, I needed real device testing. Not emulator testing. Real people, real phones, real usage.

I set up hosting at mikewatson.us/leafed and started pushing APKs there. Built a download page with installation instructions. Added version tracking. Sent links to friends and family.

This taught me more than I expected. Watching someone toggle their Android security settings to “allow unknown sources” teaches you friction the documentation never mentions.

It’s also essentially free (minus domain costs I already had). And it gave me an automated workflow: build locally, test on device, push to web, distribute link. The entire cycle in minutes.
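The post doesn’t describe how the version tracking worked, so the following is only a sketch under assumptions: a hypothetical `ReleaseManifest` JSON file published next to each APK on the download page, plus a comparison that tells an installed build whether a newer one is available.

```typescript
// Hypothetical manifest shape a download page could publish alongside each APK.
interface ReleaseManifest {
  version: string; // dotted numeric version, e.g. "1.0.3"
  apkUrl: string;
  notes: string;
}

// Compare dotted numeric versions segment by segment, so "1.2.10" > "1.2.9".
// Missing segments count as 0, so "1.0" equals "1.0.0". Returns -1, 0, or 1.
function compareVersions(a: string, b: string): number {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
    const diff = (pa[i] ?? 0) - (pb[i] ?? 0);
    if (diff !== 0) return Math.sign(diff);
  }
  return 0;
}

function updateAvailable(installed: string, latest: ReleaseManifest): boolean {
  return compareVersions(latest.version, installed) > 0;
}
```

Comparing numerically instead of as strings matters here: as plain strings, "1.2.10" sorts before "1.2.9".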

Real costs (it’s not free, but it’s reasonable).

Let’s be honest about money:

Cursor Pro: $20/month for comprehensive planning and code generation.

Expo Starter plan: $19 (the entry-level paid plan) for EAS builds.

Google Play developer account: $25 one-time fee for Play Store submissions.

Apple Developer Program: $99/year (if you want iOS too).

Domain hosting: Already had mikewatson.us, minimal additional cost.

Testing service: $15 (more on this next week).

Total for Android: Around $80 upfront, plus subscriptions if you keep using them.

Add Apple’s $99/year and you’re looking at closer to $180 in the first year.
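As a worked check of the totals, here is the cost list as a small TypeScript tally. It counts one month each of the Cursor and Expo subscriptions as “upfront” (that counting rule is an assumption) and treats the already-owned domain as zero.

```typescript
interface CostItem {
  item: string;
  usd: number;
  appleOnly: boolean;
}

// Figures from the list above; domain hosting omitted as an existing cost.
const costs: CostItem[] = [
  { item: "Cursor Pro (first month)", usd: 20, appleOnly: false },
  { item: "Expo Starter plan", usd: 19, appleOnly: false },
  { item: "Google Play developer account", usd: 25, appleOnly: false },
  { item: "Testing service", usd: 15, appleOnly: false },
  { item: "Apple Developer Program (1 year)", usd: 99, appleOnly: true },
];

function upfrontTotal(includeApple: boolean): number {
  return costs
    .filter((c) => includeApple || !c.appleOnly)
    .reduce((sum, c) => sum + c.usd, 0);
}

console.log(upfrontTotal(false)); // 79
console.log(upfrontTotal(true)); // 178
```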

Not “free.” Also not “hire a development team” money. The democratization of app development is real. But be honest about costs.

I’m hoping a few Amazon affiliate link clicks and Buy Me a Coffee posts might offset some of these costs. If not? It was worth it for the education.

What can you use tomorrow?

You don’t need to build an app to apply this learning:

Stop prompting AI tools incrementally. Ask for the comprehensive plan first.

Whether you’re planning a product feature, mapping a user flow, or architecting a system, treat these tools like experienced advisors who see the whole picture. Not code completion engines.

Use Cursor’s Agent “Plan” feature. Ask for the checklist. Ask for the deployment strategy. Ask for the feature flag system that lets you build ambitiously and cut intelligently.

The tools exist. Cursor can help you build a mobile app without writing every line. React Native and Expo let you target multiple platforms from one codebase. Google offers ML capabilities as free APIs. GitHub is full of solutions to problems you think are hard.

The barrier isn’t technical skill. It’s understanding the full process and being willing to build big, test honestly, and ship smart.

What’s next?

Right now, I’m on day 13 of Google’s closed testing requirement. The app is working. Testers are engaged. The 14-day countdown is almost over.

Next week, I’ll walk you through what happens when you actually try to submit to both platforms: the testing requirements, the essay questions, and what “production ready” actually means according to Google and Apple.

Part 2 drops next Tuesday, after I’ve submitted a production request to the Play Store and started the Apple review process.

Until next week,

Mike @ Product Party

Want to connect? Send me a message on LinkedIn, Bluesky, Threads, or Instagram.

P.S. If you’re planning a project like this, check out ClickUp for managing your build process. Great for tracking multi-phase projects with lots of moving pieces.