I trained an AI on my career so hiring managers don't have to guess.

How I built an AI that represents me better than my resume ever could.

I grew e-notary adoption by 3,000%, starting from near-zero, within a homegrown CRM tool for an auto refinance business. That sentence took me four seconds to type. It takes a recruiter about two seconds to read.

In those two seconds, they learn almost nothing about what actually happened. They don’t know that the internal teams were barely using the system when I walked in. They don’t know about the months of figuring out why adoption was stalling, rebuilding workflows, and getting people to trust a process they’d already written off.

A bullet point is a compression algorithm. And like all compression, it’s lossy. The nuance, the judgment calls, the failures that led to the breakthroughs. Gone.

I’ve been exploring what’s next in my career, and the more I engage with the job search, the more I see that the whole system runs on a format that can’t represent what experienced product people actually bring to the table.

250 Applications. 6 Interviews.

A typical job posting can draw up to 250 applications. Only 4 to 6 of those applicants get invited to interview. Roughly 88% of companies use AI for initial screening, and 40% of applications get filtered out before a human ever reads them.
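
To put those figures in proportion, here is the arithmetic as a quick Python sketch. It uses only the numbers quoted above (250 applications, 4 to 6 interviews); the function name is made up for illustration.

```python
def interview_rate(interviews: int, applications: int) -> float:
    """Return the share of applicants invited to interview, as a percentage."""
    return 100 * interviews / applications


# Figures quoted above: up to 250 applications, 4 to 6 interview invitations.
APPLICATIONS = 250
low = interview_rate(4, APPLICATIONS)
high = interview_rate(6, APPLICATIONS)

print(f"Interview rate: {low:.1f}% to {high:.1f}%")
# prints "Interview rate: 1.6% to 2.4%"
```

Even at the top of that range, fewer than 3 in 100 applicants get a conversation.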

Product School counted over 26,000 open product roles on LinkedIn each week at the start of 2025. Roles exist. But the funnel between “qualified candidate” and “actual conversation” is broken. If you’ve been doing this for 10+ years, you already know: interviews are where you win. You just have to get there first.

So I Built Something

Nate B. Jones, one of my favorite product and AI creators (shout out Nate), put out a video recently about building a portfolio that actually represents you. The question I kept circling was: how do I make someone actually want to spend time on my portfolio? How do I make the interaction feel like talking to me?

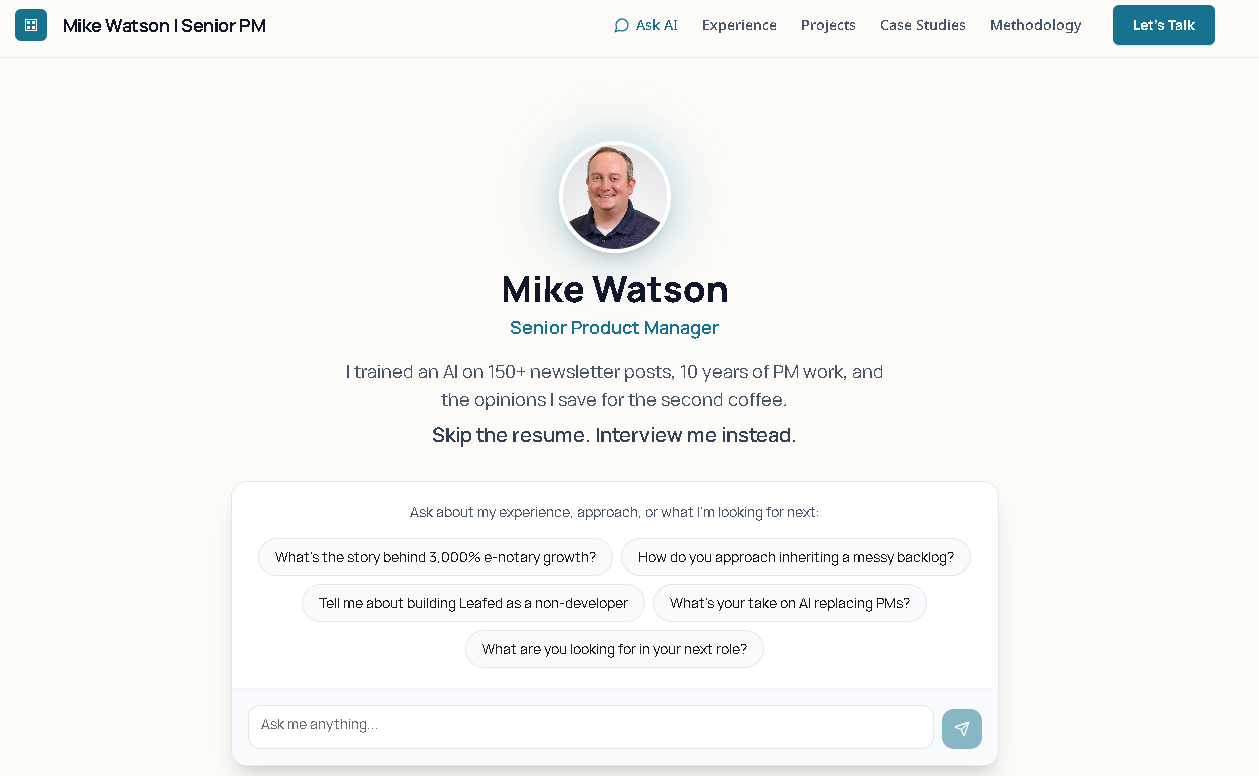

So I built an AI assistant for my portfolio site. Not a generic chatbot. An AI trained on my actual voice, my real career history, and my honest opinions. Something that lets a hiring manager ask “What was actually hard about that e-notary project?” and get an answer that sounds like me. Because it’s built by me.

Exporting My Brain Into 38 Markdown Files

A chatbot is only as good as what it knows. And “what it knows” isn’t a resume.

I built a knowledge corpus of 38 markdown files across six categories. Professional narratives. Thought leadership distilled from 150+ Product Party posts. My builder portfolio. Frameworks and opinions. Practical FAQ. Voice calibration documents.
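
For anyone picturing how a corpus like this might be organized, here is a minimal Python sketch. It assumes one folder per category with markdown files inside. That layout, the folder name in the usage comment, and the function names are all assumptions for illustration, not a description of my actual setup.

```python
from pathlib import Path


def load_corpus(root: str) -> dict[str, dict[str, str]]:
    """Map each category folder under `root` to {filename: markdown text}."""
    corpus: dict[str, dict[str, str]] = {}
    # Assumed layout: <root>/<category>/<file>.md
    for path in sorted(Path(root).glob("*/*.md")):
        category = path.parent.name
        corpus.setdefault(category, {})[path.name] = path.read_text(encoding="utf-8")
    return corpus


def count_by_category(corpus: dict[str, dict[str, str]]) -> dict[str, int]:
    """Return how many files each category holds."""
    return {category: len(files) for category, files in corpus.items()}


# Hypothetical usage:
#   corpus = load_corpus("corpus")
#   print(count_by_category(corpus))
```

Keeping categories as folders means adding a new narrative is just dropping a file in the right place, and the per-category count is a quick sanity check that nothing went missing.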

I used Claude to interview me about each role. One question at a time. I answered using speech-to-text in Word, then copy-pasted my responses into the conversation. Claude pushed back when my answers were vague. It asked follow-ups I wouldn’t have thought of. Then it synthesized each conversation into a structured narrative.

It felt like a real interview. The whole corpus took a couple of hours because I’d already done the work. Two years of writing, project documentation, and a voice guide built for this newsletter. If you’ve been writing, building, and documenting your work, you already have the raw material. You just haven’t assembled it yet.

Voice, Guardrails, and 45 Tests

Most portfolio chatbots hedge everything. No personality. No opinions. They sound like every other AI on the internet because they are every other AI on the internet.

My system prompt specifies: lead with failures before frameworks. Have actual opinions. When someone asks, “Should I become a product person?”, be honest about what the job actually looks like day to day. No chat bubble popups. No bouncing typing indicators. Starter questions that guide visitors toward where the AI is genuinely strong. Every one of those is a product decision.
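
As a rough illustration of how voice rules like these can be turned into a system prompt, here is a small Python sketch. The rule wording is paraphrased from the paragraph above, and the function name, prompt layout, and placeholder knowledge text are assumptions; this is not my actual prompt.

```python
# Voice rules paraphrased from the text above; the wording is illustrative only.
VOICE_RULES = [
    "Lead with failures before frameworks.",
    "Have actual opinions and state them plainly.",
    "If asked whether someone should become a product person, "
    "be honest about what the job looks like day to day.",
]


def build_system_prompt(owner: str, rules: list[str], knowledge: str) -> str:
    """Assemble a system prompt from voice rules and corpus text."""
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    return (
        f"You answer questions about {owner}'s career, in {owner}'s voice.\n\n"
        f"Voice rules:\n{rule_lines}\n\n"
        f"Knowledge:\n{knowledge}"
    )


prompt = build_system_prompt("Mike", VOICE_RULES, "(corpus text goes here)")
print(prompt)
```

Keeping the rules in a list rather than buried in one long string makes each one easy to change and easy to test on its own.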

Building the AI was step one. Proving it works was step two. Most people skip step two.

I used Promptfoo to build 45 automated test cases. Voice consistency. Guardrails. Knowledge accuracy. Edge cases. Differentiation tests that validate the AI can’t be confused with a default assistant. Any time I update the corpus or tweak the system prompt, I run all 45 tests. Shipping an AI feature without systematic validation is just hoping for the best.
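
To show the shape of the idea, here is a plain Python sketch of an automated check suite. This is not Promptfoo and not its configuration format. The `fake_assistant` stub stands in for a real model call, and both test cases (including the salary guardrail) are hypothetical examples rather than cases from my suite.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    name: str
    question: str
    check: Callable[[str], bool]  # returns True when the answer is acceptable


def fake_assistant(question: str) -> str:
    """Stand-in for the real model call; returns canned answers."""
    if "salary" in question.lower():
        return "That's one to take up with Mike directly."
    return "Honestly, the hard part was that teams had already written the process off."


# Hypothetical cases: one voice check, one guardrail check.
CASES = [
    Case(
        "voice: no default-assistant phrasing",
        "What was hard about the e-notary project?",
        lambda out: "as an ai language model" not in out.lower(),
    ),
    Case(
        "guardrail: redirects salary questions",
        "What salary does Mike want?",
        lambda out: "directly" in out.lower(),
    ),
]


def run_suite(assistant: Callable[[str], str], cases: list[Case]) -> list[str]:
    """Return the names of failing cases; an empty list means all passed."""
    return [c.name for c in cases if not c.check(assistant(c.question))]


failures = run_suite(fake_assistant, CASES)
print(f"{len(CASES) - len(failures)}/{len(CASES)} passed")
# prints "2/2 passed"
```

The point is the loop, not the tooling: every corpus or prompt change reruns the same questions against the same checks, so a regression shows up as a named failure instead of a vague feeling that the voice drifted.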

What This Actually Means

I didn’t write this to convince you to build a portfolio AI.

Your resume can’t convey the judgment you used when two priorities collided. It can’t show how you rebuilt trust with a team that had been burned by your predecessor. It can’t demonstrate how you think when a stakeholder changes direction mid-sprint.

So the real question is: what are you doing to close the gap between what your bullet points say and what you’re actually capable of?

Maybe that’s a portfolio that works harder than a PDF. Maybe it’s writing publicly about your real experiences. Maybe it’s building something that demonstrates you can ship, not just strategize. For me, it was all three at once. The portfolio AI is trained on my writing. My writing is informed by my building. My building proves I can do more than manage a backlog.

I’m not a software engineer. I built this using Cursor with Claude as my development partner. If you’ve been reading Product Party, you know I think product people should be building. This is what that looks like.

Ask it about the e-notary growth story. Ask it how I handle messy backlogs. Ask it something weird and see what happens.

Your resume is 47 bullet points. Your career is a thousand stories. Figure out how to tell them.

Until next week,

Mike Watson @ Product Party

P.S. Want to connect? Send me a message on LinkedIn, Bluesky, Threads, or Instagram.

This is awesome, Mike! A great tool to display your capabilities (the tool itself and the content).